Google today announced that it has teamed up with the Hadoop specialists at Cloudera to bring its Cloud Dataflow programming model to Apache’s Spark data processing engine.

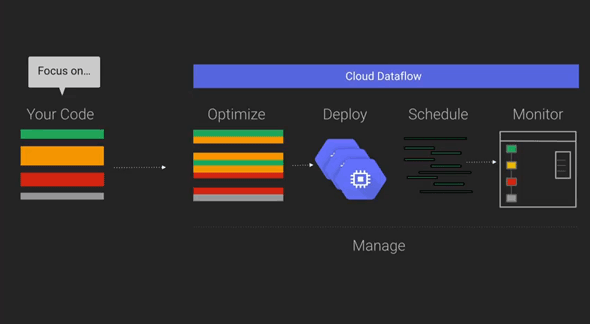

With Google Cloud Dataflow, developers can create and monitor data processing pipelines without having to worry about the underlying data processing cluster. As Google likes to stress, the service evolved out of the company’s internal tools for processing large datasets at Internet scale. Not all data processing tasks are the same, though, and sometimes you may want to run a task in the cloud, on premises or on a different processing engine. With Cloud Dataflow, at least in its ideal state, data analysts will be able to use the same system for creating their pipelines, no matter which underlying architecture they want to run them on.

Google first announced Dataflow last summer as a hosted service on its own platform, one that relied on Google’s own Compute Engine, Cloud Storage and BigQuery services. Exactly a month ago, the company opened up a Java SDK for the service to help developers integrate it into other languages and environments. Now, with the help of Cloudera, it is doing exactly that in the form of an open source Dataflow “runner” for Spark. With this, developers can now run Cloud Dataflow pipelines on their own local machines, on Google’s hosted service (which is still in private alpha testing) and on Spark.
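
To make the “runner” idea concrete, here is a minimal toy sketch in Python. It is not the Dataflow SDK (which is Java) and not the Spark runner itself. The names `Pipeline`, `LocalRunner` and `ChunkedRunner` are hypothetical, made up purely for illustration. The point is only that a pipeline is defined once and then handed to whichever engine should execute it.

```python
from typing import Any, Callable, Iterable, List


class Pipeline:
    """Hypothetical engine-agnostic pipeline: an ordered list of per-element transforms."""

    def __init__(self) -> None:
        self.steps: List[Callable[[Any], Any]] = []

    def apply(self, fn: Callable[[Any], Any]) -> "Pipeline":
        # Record the transform; nothing is executed until a runner is chosen.
        self.steps.append(fn)
        return self


class LocalRunner:
    """Hypothetical runner that executes every step in-process, in one pass per step."""

    def run(self, pipeline: Pipeline, data: Iterable[Any]) -> List[Any]:
        out = list(data)
        for step in pipeline.steps:
            out = [step(x) for x in out]
        return out


class ChunkedRunner:
    """Hypothetical stand-in for a cluster engine: splits the input into partitions
    and processes each one independently (sequentially here, for simplicity)."""

    def __init__(self, partitions: int = 2) -> None:
        self.partitions = partitions

    def run(self, pipeline: Pipeline, data: Iterable[Any]) -> List[Any]:
        items = list(data)
        # Ceiling division so every element lands in a contiguous chunk.
        size = max(1, -(-len(items) // self.partitions))
        results: List[Any] = []
        for start in range(0, len(items), size):
            part = items[start:start + size]
            for step in pipeline.steps:
                part = [step(x) for x in part]
            results.extend(part)
        return results


# The same pipeline definition runs unchanged on either runner.
pipeline = Pipeline().apply(str.strip).apply(str.upper)
words = [" spark ", "dataflow", " hadoop"]
local_result = LocalRunner().run(pipeline, words)
chunked_result = ChunkedRunner(partitions=2).run(pipeline, words)
```

In this toy model, swapping the execution engine means swapping only the runner object. That is roughly the kind of portability the Spark runner is meant to offer, though a real runner would translate the pipeline into the engine’s own operations rather than loop over a list.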

The Spark version is now available on GitHub. Cloudera considers it to be an “incubating project” that’s meant for testing and experimentation only, so if you want to run it in production, you do so at your own risk. Google still considers Dataflow an alpha project, too, so the SDK could still change a bit.
